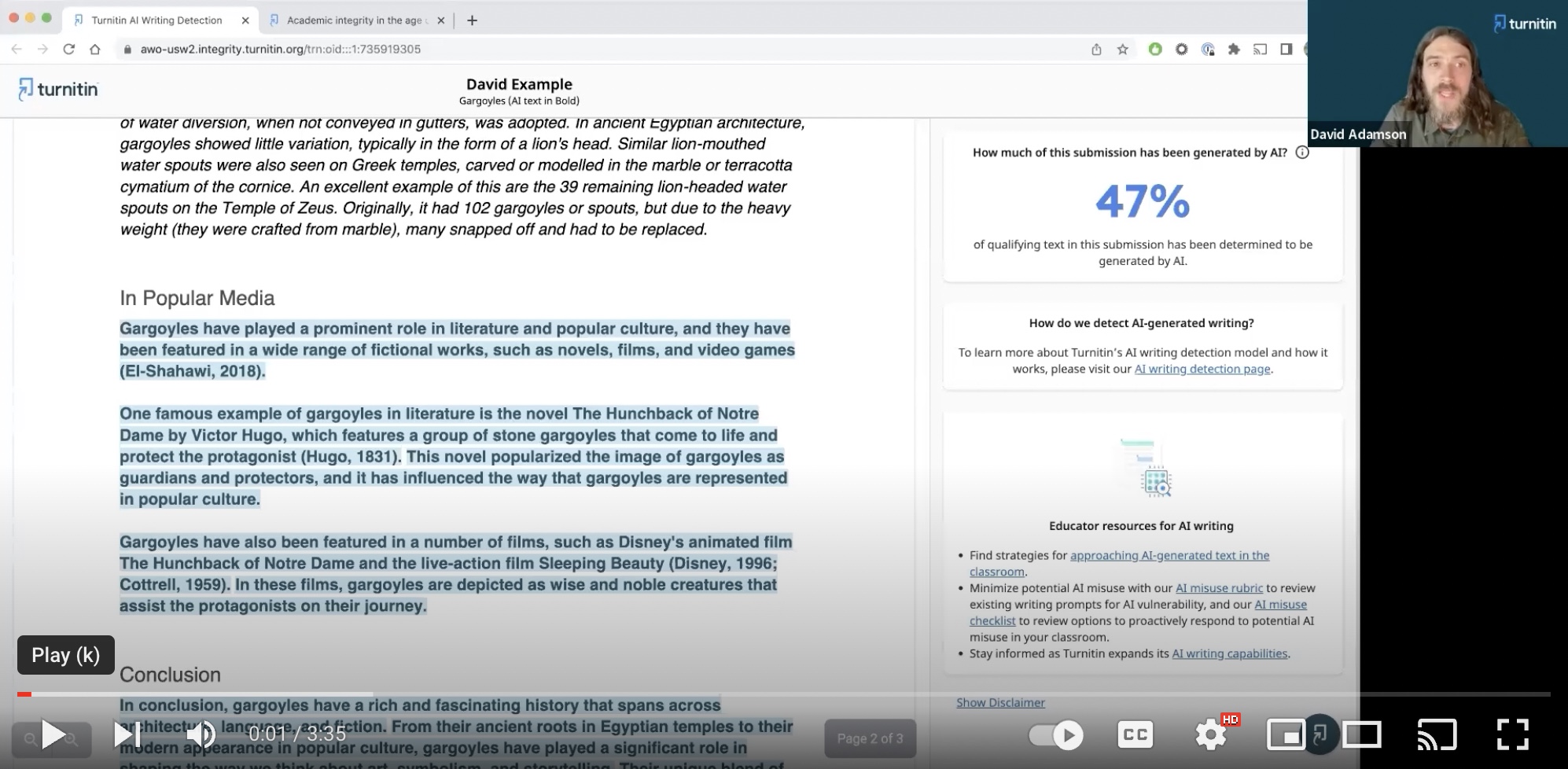

Recently, I shared an update on what we’ve learned since we launched the Preview for AI writing detection. In the same article, I highlighted that when it comes to AI writing detection metrics, there is a difference between sentence- and document-level metrics.

Our document-level false positive rate (incorrectly identifying fully human-written text as AI-generated) is less than 1% for documents with 20% or more AI writing.

Our sentence-level false positive rate is around 4%. This means that there is a 4% likelihood that a specific sentence highlighted as AI-written might be human-written. This happens more often in documents that mix human- and AI-written content, particularly at the transitions between the two.

As explained in my earlier article, there is a correlation between these sentences and their proximity to actual AI writing in the document: in 54% of cases, these sentences sit immediately next to actual AI writing.

Watch this short video, in which David Adamson, an AI scientist at Turnitin and a former high school teacher, explains sentence-level false positives in more detail.

While we cannot eliminate the risk of false positives completely, given the nature of AI writing and its analysis, we believe that by being transparent and helping instructors understand what our metrics mean and how to use them, we can enable them to use the data meaningfully.

Here are a few tips that can help you further understand how to use our AI detection metrics:

- Remember that there is no “right” or “target” score with the AI writing indicator, just like with a Similarity Score.

- Always dig deeper: analyze the score in conjunction with your institution's policies on the use of AI writing tools and with the assignment's rubric.

- Treat highlighted sentences as areas of interest, because they're predicted to be close to where AI writing is present. However, a small percentage of the time, the AI model could get it wrong. So use the information to start a conversation, not to draw a conclusion.

- Engage with your student if you have doubts about the authenticity of the submission. Ask them to explain, among other things, what the essay is about, the process they followed, and their ideas and key takeaways. You can use this guide on how to have an honest, open dialogue with students regarding their work.

Additional resources:

For educators:

- Handling false positives for educators: False positives are not common, but when they occur, the conversation can be challenging. Use this guide to think about conversations before and after a submission.

- Approaching a student regarding potential AI misuse: An honest, open dialogue with students regarding their work is the first step. Use this guide to help defuse a potentially difficult interaction.

For students:

- Handling false positives for students: When an instructor approaches a student regarding a potential false positive, this guide can help students prepare for that conversation.

- Ethical AI use checklist for students: This checklist offers guidelines to help students uphold their own academic integrity.

For more on false positives, read our previous blog post, "Understanding false positives within our AI writing detection capabilities."