As a former educator, I recall those times when, much like my secondary English Language Arts students, I wanted a simple right-or-wrong answer to my questions. Sadly, such answers are rare, however comforting they may be. Generative AI writing tools and the use of Turnitin’s AI writing indicator have one thing in common: there is no single “right” approach. “Appropriate” may be the more accurate term, since suitable parameters for generative AI use can vary greatly from one assignment to another, or from one student to another. Determining the significance of the AI writing score for and with your students is a key step in helping them navigate the world of generative AI writing tools.

The unknown can feel frightening and overwhelming, so let's start with what IS known and use it as a framework for understanding the new.

How does the AI writing indicator compare to the Similarity Score?

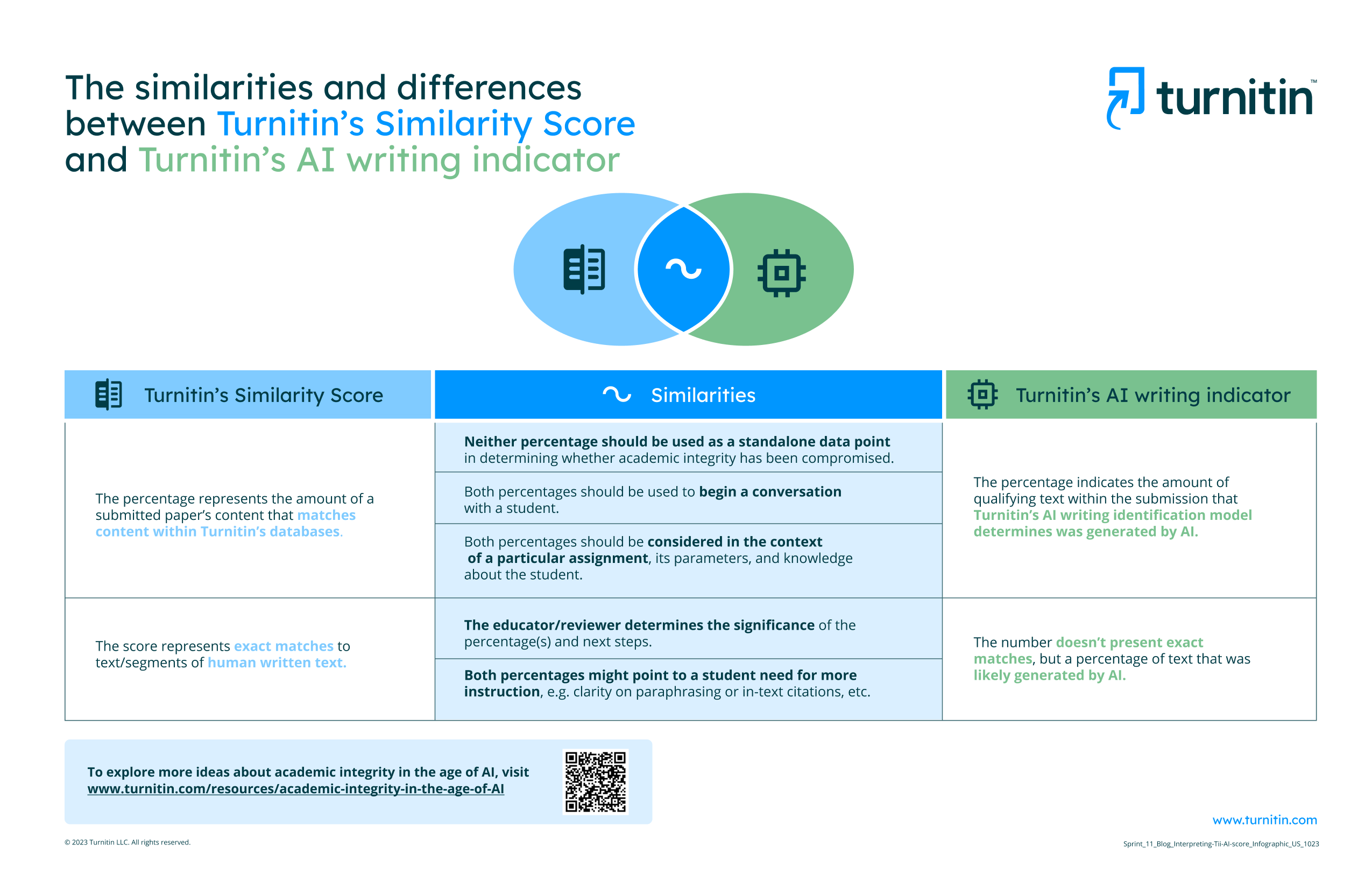

Linking new ideas to existing knowledge is a strong strategy, so let’s begin with the Turnitin Similarity Score, which is already familiar to many educators. While the two scores differ in some significant ways, both require nuance and context. Veteran Turnitin users know that setting an arbitrary percentage threshold for the Similarity Score, without considering the assignment or the student, makes it less likely that the information will inform a meaningful conversation about the student’s writing.

Perhaps the most significant difference between these two percentages is how they are determined. Turnitin’s Similarity Score reflects text that exactly matches sources in a large body of content used for similarity checking. The AI writing score, by contrast, indicates the percentage of text in a document that was likely generated by an AI tool (see #9 of AI detection results and interpretation). This difference makes some educators uncomfortable because the AI writing score seems to occupy a gray area, but shifting that perspective is necessary to harness the power of this information.

The fact that no exact match is identified does not make the information less valuable. In fact, the absence of an exact match makes little difference when the score is used as intended: as a single data point that informs the educator’s understanding of the student’s thinking and work. The educator’s own knowledge of the student and their work is as crucial to understanding the AI writing score as the percentage itself. The score cannot stand alone. Without the educator’s sense of the assignment, the student, and the overall context, it is arbitrary and far less meaningful.

We at Turnitin encourage educators to interpret the AI writing score the same way we recommend interpreting the Similarity Score. Ideally, the score is the first step in a conversation that takes place in the formative space, rather than a summative or punitive measure. When a score (Similarity or AI writing) is treated as the starting point for a dialogue rather than a definitive statement about a student’s work, these conversations take on real purpose as opportunities for learning.

To that end, our team of veteran educators developed a helpful resource to support educators and academic decision makers who are looking to understand the similarities and differences between Turnitin's Similarity Score and Turnitin's AI writing indicator. Below, you'll find a downloadable infographic that spells out how each tool can and should be used to responsibly support original student work.

The case for not setting a single AI writing score for a course

While some might argue that a “one score fits all” approach is fair, a closer look at assignment and student dynamics shows otherwise. In the interests of fairness and equity, educators should consider multiple factors when reviewing student writing:

- Genre of the assignment. When reviewing a creative or personal narrative, a lower AI writing score would be expected, because the writing should reflect original thinking that is personal to the student. When reviewing a research-based piece of writing, a lower AI writing score might indicate a lack of cited evidence to back up the student’s claims. A higher score might indicate a more appropriate level of supporting evidence, or it might reveal intentional or unintentional misconduct. A conversation with the student during a review of the work can help the educator determine what happened, why, and what the next steps might be.

- Length of an assignment. When reviewing a brief essay rather than a longer research paper, educators may need to consider the possibility of false positives. Submissions with fewer than 300 words are more likely to produce a false positive. Again, guidelines can help when weighing the AI writing score, but the indicator itself does not determine how much the score should matter. Only the educator, who is familiar with both the assignment parameters and the student, can determine how much weight it could and should carry.

- Guidelines for usage of AI writing tools. Some assignments may prohibit the use of generative AI writing tools, or permit them only in certain ways. These guidelines are also important to consider when speaking with students about their work.

- Student needs. While the variables above remain largely the same for everyone completing an assignment, the greatest variable in this decision-making is the student. It is paramount not to penalize students with exceptionalities for using tools that are documented to address their particular circumstances. For example, an English language learner might be permitted to use an AI-powered translation tool, which could cause some or all of the submitted work to be flagged as AI-generated. Failing to consider this could unfairly penalize the student and potentially violate the terms of their accommodations.

While this is not an exhaustive list of exceptionalities or other factors that might impact an educator’s judgment about a student’s AI writing score, the educator’s knowledge of the student and the application of that knowledge is essential to making a fair and reasonable determination. As always, when there is any doubt as to whether academic misconduct has occurred, erring on the side of caution is best.

What factors influence whether the AI writing score merits a conversation?

As with any tool, the success of Turnitin’s AI writing indicator largely depends on how it’s used. When educators treat the AI writing score as a single data point rather than a definitive answer, they are using it as intended. No tool can replace the educator’s judgment, informed by other data points, in deciding whether a conversation is needed.

As education leaders approach these important decisions with vigilance and care, it's important to consider the different reasons scores may vary and how each might be addressed. The next section walks through one possible way to model that thinking and decision-making process.

Why is an AI writing score of __ ok for Student A, but not appropriate for Student B or Student C?

Because educators know their students, their work, and any special circumstances or needs, that knowledge supersedes an arbitrary score, which is useful only as a possible starting point.

Assume that I have been building relationships with these students all semester. For this example assignment, AI use was allowed as a brainstorming tool and, if needed, to help write a thesis statement. I communicated these guidelines, including the expectation that AI tools and other resources be cited, along with the other parameters when I introduced the assignment. This is not my first conversation about writing with these students, but it is the first about the AI writing indicator and score. I therefore want to prepare so that everyone leaves the conversation satisfied with the outcome.

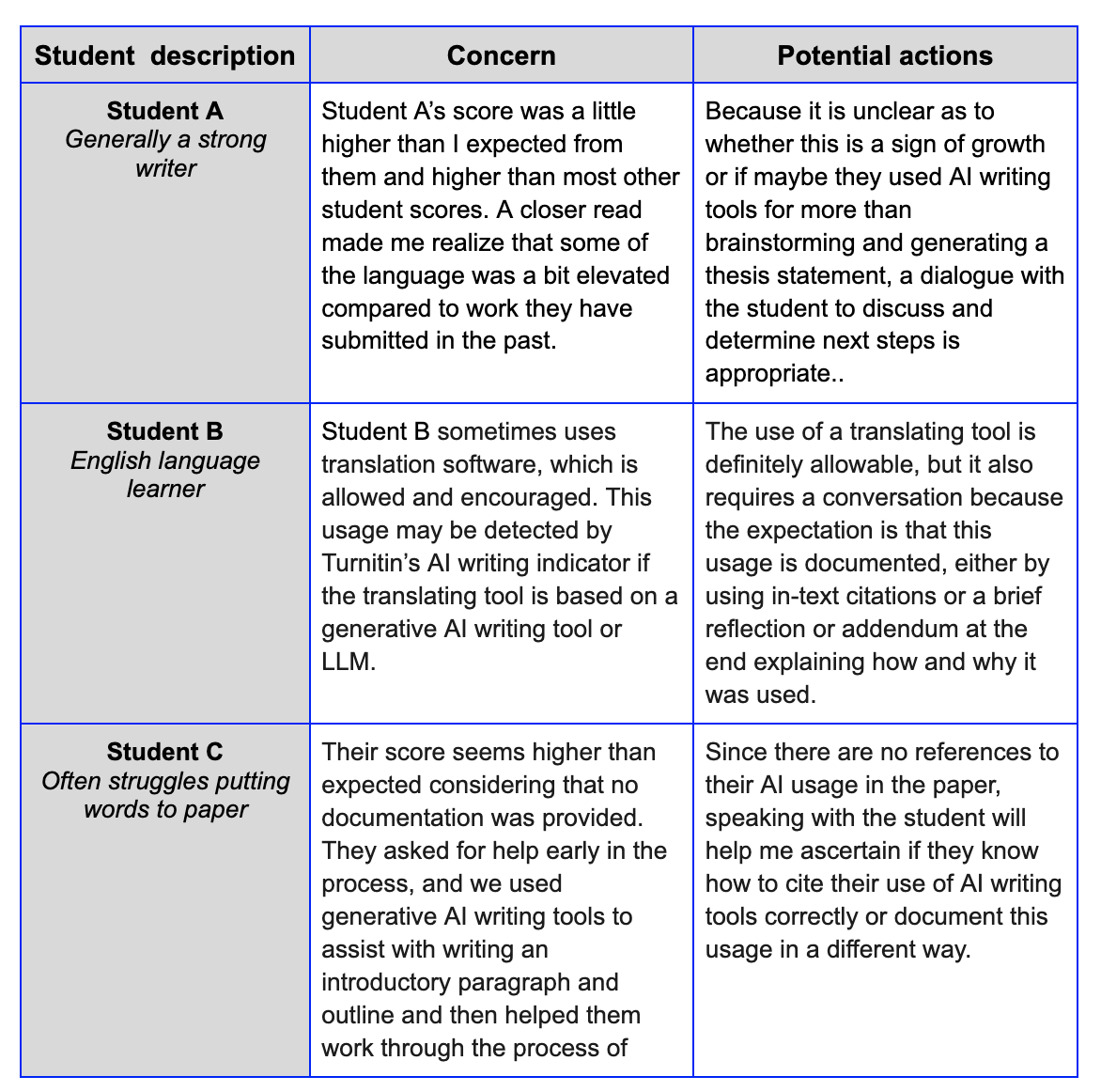

Let’s take a look at some of my students: Student A, Student B, and Student C. Each has unique characteristics that factored into the decision to have a deeper conversation. I expected some variance in scores, but I also expected the scores to fall within certain levels. This was true for most students, but not for these three, who received notably higher AI writing scores on the assignment.

In the table below, each of the three student profiles is paired with the specific concern that gives greater context to that student's AI writing score. The “Potential solution” column outlines my thought process as an educator or decision-maker and offers possible next steps with each student’s profile and particular concern in mind.

As highlighted above in the “Potential solution” column, I need a conversation with each of the three students to clarify what happened and perhaps to suggest some adjustments. I would not expect all students to have the same score, or even to fall within a particular range. The main point of these conversations is to focus on writing and to ensure that students are supported when using generative AI writing tools, so that their writing showcases their own original thinking.

How can educators use the AI writing indicator to facilitate good writing instruction?

Acknowledging that the tools exist, and sharing when, why, and how generative AI writing tools can be used as an aid (never a substitute!), is important to students’ writing journeys. AI writing tools appear to be here to stay. Whether or not one views this innovation as positive, students will likely use generative AI after secondary school, whether they go on to university, into a trade, or into some other part of the workforce.

Students use AI writing tools for a variety of reasons, but when the discussion focuses on solutions rather than misuse, learning stays at the center. Whether any misuse was intentional becomes less important than learning how to “fix” it. That might mean helping students manage their time more efficiently instead of copying and pasting an entire essay, teaching in-text citations instead of simply inserting another writer’s or bot’s words, or teaching paraphrasing instead of … There are as many reasons students use generative AI writing tools as there are students. Just as importantly, there are as many solutions that maintain the student’s academic integrity.

When the AI writing score informs conversations about student writing in the formative space, those conversations become part of the process and the support that students often need to improve their writing. The score is a good starting point for discussing how to use these tools while maintaining the integrity of students’ work. Talking with students about their process is key to helping them improve their writing, as is working out together how to respond to writing challenges when generative AI seems like the easier path.

In sum: Interpreting Turnitin’s AI writing score

Turnitin’s AI writing indicator provides a percentage and a report highlighting text that was likely generated using AI writing tools… and that’s really it. It is not a magic button that provides definitive answers in isolation. More important than any tool is the educator who sees the score and makes decisions by balancing this information with their knowledge of their students, their students' work, and institutional policy.